[Paper Review] SS-GAN: Self-Supervised GANs via Auxiliary Rotation Loss, a brief paper review

Paper : SS-GAN: Self-Supervised GANs via Auxiliary Rotation Loss (CVPR 2019)

- Conditional GANs are stable and easy to train, but they require labels

⭐ Unsupervised Generative Model that combines adversarial training with self-supervised learning

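- SS-GAN: one of the first papers to apply self-supervised learning to GANs

- SS-GAN keeps the benefits of a conditional GAN even without labeled data

- An auxiliary self-supervised loss is added to the discriminator (D) so that training is stable and the learned representations are useful

- In natural image synthesis, the self-supervised GAN trains about as well as its labeled counterpart, even though it uses no labels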

The Self-Supervised GAN

- The main idea behind self-supervision is to train a model on a pretext task, such as predicting the rotation angle of an image or the relative location of an image patch, and then to extract representations from the resulting network

- This paper applies image rotation, one of the SOTA self-supervision methods, to GANs

- D is augmented with a rotation-based loss!
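The rotation pretext task described above can be sketched in a few lines. This is a minimal NumPy illustration, not the authors' code: the function names `make_rotation_batch` and `rotation_cross_entropy` are hypothetical, images are assumed to be square and stored as `(N, H, W, C)`, and the logits are assumed to come from a 4-way rotation head on D.

```python
import numpy as np

# Number of 90-degree turns per class: 0, 90, 180, 270 degrees.
ROTATIONS = (0, 1, 2, 3)


def make_rotation_batch(images):
    """Return every image in all four rotations, plus the rotation index as label.

    images: array of shape (N, H, W, C); H must equal W so shapes stay equal.
    """
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in ROTATIONS]
    labels = [np.full(len(images), k, dtype=np.int64) for k in ROTATIONS]
    return np.concatenate(rotated, axis=0), np.concatenate(labels, axis=0)


def rotation_cross_entropy(logits, labels):
    """Mean cross-entropy between 4-way rotation logits and rotation labels."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()


if __name__ == "__main__":
    images = np.random.default_rng(0).normal(size=(2, 8, 8, 3))
    x_rot, y_rot = make_rotation_batch(images)
    print(x_rot.shape)  # (8, 8, 8, 3)
    print(y_rot)        # [0 0 1 1 2 2 3 3]
```

The labels come for free from the transformation itself, which is why no human annotation is needed for this auxiliary task.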

Loss Function

- Original GAN Loss

- Original GAN Loss + Rotation-based Loss

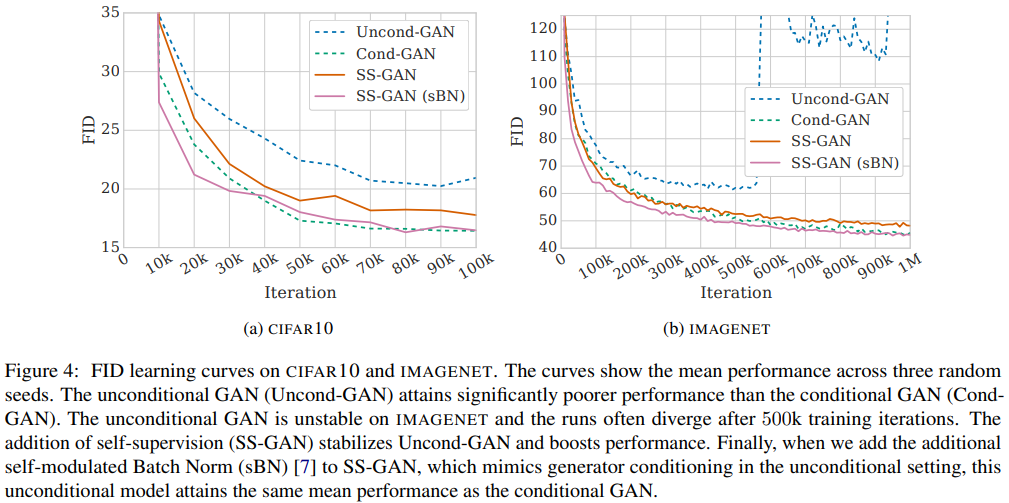
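Because the two losses listed above appear only as an image, here is a sketch of them written out. The notation is an assumption based on my reading of the paper and should be checked against the original: $P_D(S = 1 \mid x)$ is D's real/fake output, $Q_D(R = r \mid x^r)$ is D's rotation head applied to the image $x$ rotated by $r$, $\mathcal{R} = \{0^\circ, 90^\circ, 180^\circ, 270^\circ\}$, and $\alpha, \beta$ are weighting hyperparameters. The sign convention is chosen here so that each objective is self-consistent and may differ from how the paper writes it.

```latex
% Original GAN value function: G minimizes, D maximizes
V(G, D) = \mathbb{E}_{x \sim P_{\text{data}}}\left[\log P_D(S = 1 \mid x)\right]
        + \mathbb{E}_{x \sim P_G}\left[\log\left(1 - P_D(S = 1 \mid x)\right)\right]

% Original GAN loss + rotation-based loss
\min_G \; V(G, D) - \alpha \, \mathbb{E}_{x \sim P_G} \, \mathbb{E}_{r \sim \mathcal{R}}\left[\log Q_D(R = r \mid x^r)\right]

\max_D \; V(G, D) + \beta \, \mathbb{E}_{x \sim P_{\text{data}}} \, \mathbb{E}_{r \sim \mathcal{R}}\left[\log Q_D(R = r \mid x^r)\right]
```

In this form the rotation term is collaborative rather than adversarial: G is rewarded when D can recognize the rotation of generated images, and D learns the rotation task on real images.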

Experimental Results

- Training works better than expected

- Results are much better than those of the unconditional GAN and comparable to those of the conditional GAN
